Johan De Gelas and crew present an interesting comparison of Dunnington, Shanghai, Istanbul and Nehalem in a new post at AnandTech this week. In the test line-up are the “top bin” parts from Intel and AMD in 4-core and 6-core incarnations:

- Intel “Nehalem-EP” Xeon, X5570, 2.93GHz, 4-core, 8-thread

- Intel “Dunnington” Xeon, X7460, 2.66GHz, 6-core, 6-thread

- AMD “Shanghai” Opteron 2389/8389, 2.9GHz, 4-core, 4-thread

- AMD “Istanbul” Opteron 2435/8435, 2.6GHz, 6-core, 6-thread

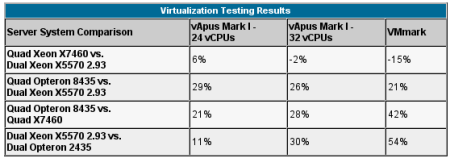

Most important for virtualization systems architects is how vCPU scheduling affects “measured” performance. The telling piece comes from the difference in comparison results when vCPU scheduling is equalized.

In comparing the results, De Gelas hits on the I/O factor that chiefly separates VMmark from vAPUS:

The result is that VMmark with its huge number of VMs per server (up to 102 VMs!) places a lot of stress on the I/O systems. The reason for the Intel Xeon X5570’s crushing VMmark results cannot be explained by the processor architecture alone. One possible explanation may be that the VMDq (multiple queues and offloading of the virtual switch to the hardware) implementation of the Intel NICs is better than the Broadcom NICs that are typically found in the AMD based servers.

Johan De Gelas, AnandTech, Oct 2009

This is yet another issue that VMware architects struggle with in complex deployments. The latency in “Dunnington” is a huge contributor to its downfall and a reason why the Penryn architecture was a dead end. With 8 additional threads in the 2P form factor (16 versus 8), Nehalem delivers twice the number of hardware execution contexts as Shanghai, resulting in significant efficiencies for Nehalem where small working data sets are involved.

When larger sets are used, as in vAPUS, Istanbul’s additional cores allow it to close the gap to within the clock speed difference of Nehalem (about 12%). In contrast to VMmark, which implies a 3:2 advantage to Nehalem, the vAPUS results suggest a closer performance gap in more aggressive virtualization use cases.
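The thread-count and clock-speed arithmetic behind these comparisons can be checked with a short sketch. The figures are the per-socket specs from the line-up above; the dictionary keys, the function names and the default of two sockets are our own illustrative assumptions, not anything taken from AnandTech's test harness.

```python
# Per-socket specs as listed in the test line-up above.
# Key names and structure are illustrative assumptions.
SPECS = {
    "Nehalem-EP X5570": {"ghz": 2.93, "cores": 4, "threads": 8},
    "Dunnington X7460": {"ghz": 2.66, "cores": 6, "threads": 6},
    "Shanghai 2389": {"ghz": 2.9, "cores": 4, "threads": 4},
    "Istanbul 2435": {"ghz": 2.6, "cores": 6, "threads": 6},
}


def hw_threads(name, sockets=2):
    """Hardware execution contexts for a system with `sockets` CPUs."""
    return SPECS[name]["threads"] * sockets


def clock_advantage(fast, slow):
    """Fractional clock-speed advantage of `fast` over `slow`."""
    return SPECS[fast]["ghz"] / SPECS[slow]["ghz"] - 1.0


if __name__ == "__main__":
    for name in SPECS:
        print(f"{name}: {hw_threads(name)} hardware threads in 2P")
    gap = clock_advantage("Nehalem-EP X5570", "Istanbul 2435")
    print(f"Nehalem clock advantage over Istanbul: {gap:.1%}")
```

In a 2P configuration this gives 16 hardware threads for Nehalem against 8 for Shanghai. The clock gap works out to roughly 12.7% when measured against Istanbul's 2.6GHz, or roughly 11.3% when measured against Nehalem's 2.93GHz, which brackets the "about 12%" figure.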

SOLORI’s Take: We differ with De Gelas on the reduction in vAPUS’ data set to accommodate the “cheaper” memory build of the Nehalem system. While this offers some advantages in testing, it also diminishes one of Opteron’s greatest strengths: access to cheap and abundant memory. Here we have the testing conundrum: fit the test around the competitors or the competitors around the test. The former approach presents a bias on the “pure performance” aspect of the competitors, while the latter is more typical of use-case testing.

We do not construe this issue as intentional bias on AnandTech’s part; however, it is another vector to consider in evaluating the results. De Gelas delivers a report worth reading in its entirety, and we view this as a primer to the issues that will define the first half of 2010.